
Every business has its own version of rush hour: approval queues, duplicate files, manual inputs choking progress. Intelligent Automation clears the lanes. Documents route instantly. Data flows to decision points. Skilled employees stop wasting time on routine clicks and start moving the business forward. At its core, Intelligent Automation runs on AI models engineered for execution: models that adapt, trigger actions, and orchestrate flows across finance, healthcare, and logistics.

2025 moves fast. Automation gives you a green light while others idle. This guide breaks down the execution layer — from event-driven models to cross-system coordination. We’ll show you how to design for speed — and scale it.

Why 2025 Demands Precision

Instead of overhauling entire systems, successful implementation of Intelligent Automation leaves these systems intact while reconfiguring how they interact. When models interpret documents, validate data, resolve exceptions, and route decisions directly through APIs, the process no longer relies on follow-ups and escalations. Tasks that used to require three hand-offs between teams now complete within the workflow itself.

To take it further, precision improves when automation focuses on the slowest, most judgement-intensive parts of a process. This creates a tighter loop between input, action and outcome, resulting in a system that continuously improves.

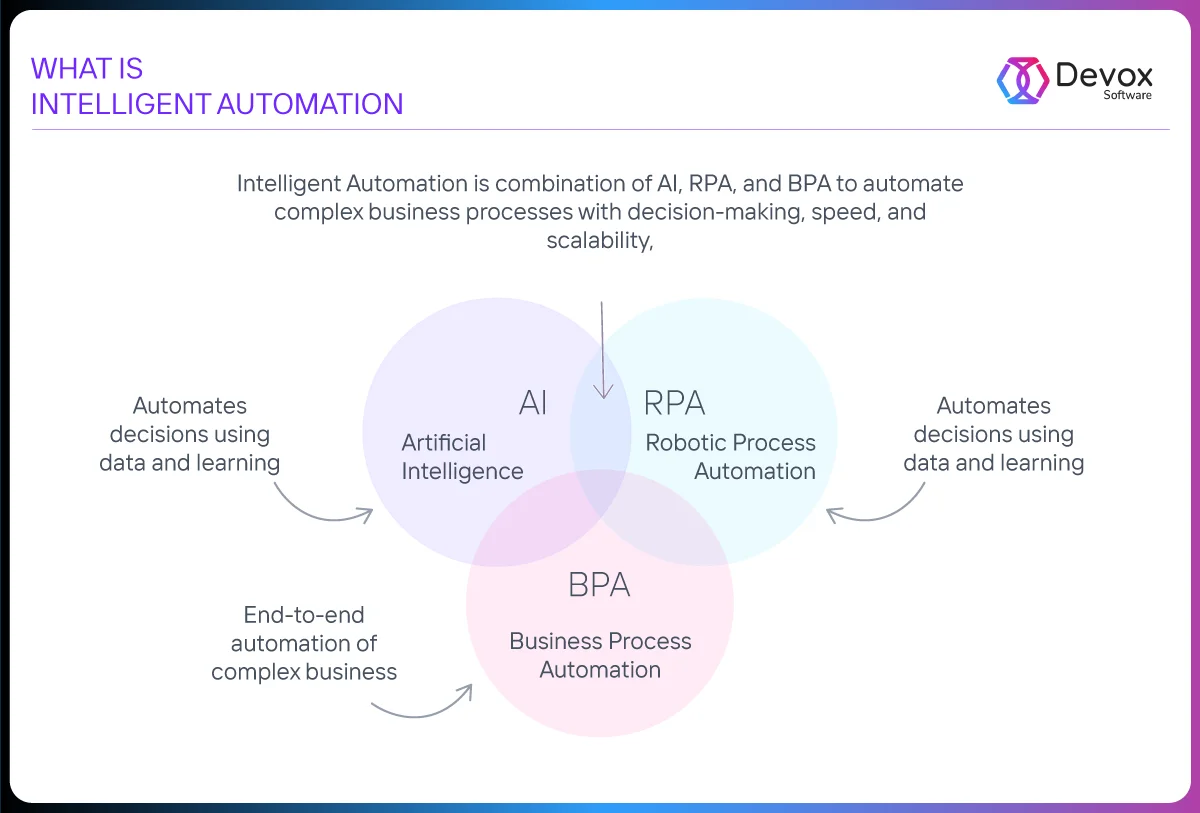

To understand how this works under the hood, it helps to break Intelligent Automation into its core components. It’s not a single tool — it’s a synthesis of three layers:

- Artificial Intelligence (AI),

- Robotic Process Automation (RPA),

- and Business Process Automation (BPA).

Together, they deliver speed, scalability, and faster decision-making. The diagram above shows how these elements connect to form a unified system.

For businesses across industries, this is not an upgrade. It's an inflection point.

Where Execution Meets Intelligence

Intelligent Automation in 2025 performs with embedded logic. It reads inputs as data and context. It classifies, recalls, resolves, and acts — inside the operational frame.

For instance, a query enters through natural language. The system:

- Resolves intent using domain-trained classifiers.

- Tracks previous interactions and constraints in context memory.

- Uses language models to extract relevant entities, validate patterns, and trigger execution across internal APIs.

Finally, routing logic selects the proper downstream workflow, submits the form, and logs the outcome.

To break it down further, one model classifies intent across high-volume flows; another holds onto context across interactions, remembering what matters from one turn to the next. Language models no longer trip over phrasing — they adapt to ambiguity, handle edge-case syntax, and stay stable across service layers. The result is action: filing claims, fetching records, escalating exceptions — all as part of the system’s natural rhythm.
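The classify, remember, route loop can be sketched as below. This is a minimal illustration, not a production design: a keyword lookup stands in for the domain-trained intent classifier, a plain dataclass stands in for context memory, and the names `INTENT_KEYWORDS`, `ROUTES`, `classify_intent`, and `handle_query` are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical keyword rules standing in for a domain-trained intent classifier.
INTENT_KEYWORDS = {
    "file_claim": ("claim", "damage", "accident"),
    "fetch_record": ("statement", "record", "invoice"),
}

# Hypothetical routing table: intent -> downstream workflow.
ROUTES = {"file_claim": "claims_workflow", "fetch_record": "records_workflow"}


@dataclass
class ContextMemory:
    """Per-session memory of earlier turns and known constraints."""
    history: list = field(default_factory=list)
    constraints: dict = field(default_factory=dict)


def classify_intent(text: str) -> str:
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(word in lowered for word in keywords):
            return intent
    return "unknown"


def handle_query(text: str, memory: ContextMemory) -> dict:
    intent = classify_intent(text)
    # An ambiguous follow-up inherits the intent resolved in the previous turn.
    if intent == "unknown" and memory.history:
        intent = memory.history[-1]["intent"]
    workflow = ROUTES.get(intent, "escalate_to_human")
    outcome = {"intent": intent, "workflow": workflow, "text": text}
    memory.history.append(outcome)  # log the outcome so the next turn can use it
    return outcome
```

With one shared `ContextMemory`, "I want to file a claim for water damage" routes to `claims_workflow`, and a follow-up such as "Also add the photos from yesterday" stays in the same workflow because memory supplies the missing intent.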

Where to Start: High-Leverage Use Cases by Industry

Intelligent Automation is most effective where manual handoffs can be mapped to machine-readable rules.

Finance: Claims Processing, Fraud Detection, KYC Automation

Speed builds trust. In financial transactions, every second that elapses between request and processing increases the risk — operational, regulatory, reputational.

That's why automation matters. Document classifiers analyze scanned IDs, utility bills, and proof of income with 98% field-level accuracy. Entity matchers check customer records against internal and third-party databases and resolve matches across languages.

In parallel, routing models assess risk as the case progresses. Cases with small deviations are resolved automatically. Exceptions surface with anomalies pre-flagged and confidence intervals attached, ready for auditor validation.
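A sketch of that split between auto-resolution and auditor review, assuming each case is compared field by field against a reference record and that a relative numeric deviation up to a hypothetical 2% tolerance is acceptable. `TOLERANCE` and `route_case` are illustrative names, and the confidence value is simply passed through from whatever model produced the extraction.

```python
TOLERANCE = 0.02  # hypothetical: relative deviation accepted without review


def route_case(extracted: dict, reference: dict, confidence: float) -> dict:
    """Auto-resolve small deviations; send anything else to review with anomalies pre-flagged."""
    anomalies = []
    for name, expected in reference.items():
        actual = extracted.get(name)
        if actual is None:
            anomalies.append({"field": name, "issue": "missing"})
        elif isinstance(expected, (int, float)) and expected != 0:
            deviation = abs(actual - expected) / abs(expected)
            if deviation > TOLERANCE:
                anomalies.append({"field": name, "issue": "deviation", "value": round(deviation, 4)})
        elif actual != expected:
            anomalies.append({"field": name, "issue": "mismatch"})
    status = "auto_resolved" if not anomalies else "auditor_review"
    return {"status": status, "anomalies": anomalies, "confidence": confidence}
```

A declared income of 5,050 against a reference of 5,000 (1% off) resolves automatically; 6,000 with a missing name field goes to review carrying both anomalies.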

What's more, fraud detection no longer trails the process; it leads it. Behavioral models track sequences, not isolated events: transaction velocity, cross-border logic breaks, device entropy. Detection fires on deviation from trained, context-aware baselines.
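To make "sequences, not events" concrete, here is a rule-based stand-in for two of those behavioral signals: transaction velocity inside a sliding time window and a country change between consecutive transactions. The class name, window size, and event limit are hypothetical; a trained sequence model would learn such thresholds rather than hard-code them, and device entropy is not modeled here.

```python
from collections import deque


class VelocityMonitor:
    """Keeps each account's recent transactions in a sliding time window."""

    def __init__(self, window_seconds: int = 600, max_events: int = 5):
        self.window = window_seconds
        self.max_events = max_events
        self.events = {}  # account_id -> deque of (timestamp, country)

    def observe(self, account_id: str, timestamp: float, country: str) -> list:
        queue = self.events.setdefault(account_id, deque())
        # Evict events that are older than the window.
        while queue and timestamp - queue[0][0] > self.window:
            queue.popleft()
        flags = []
        if queue and queue[-1][1] != country:
            flags.append("cross_border_jump")
        queue.append((timestamp, country))
        if len(queue) > self.max_events:
            flags.append("velocity")
        return flags
```

Each call judges a transaction against what the same account did minutes earlier, which is the difference between sequence-aware and event-by-event checks.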

Meanwhile, KYC evolves from checklist to system. Each stage flows through a pipeline that keeps state, tracks intent, and maintains confidence. Memory-enabled orchestration means every validation call knows what came before, and what constraint it must uphold.

| Workflow Moment | Automation Move | Immediate Win | KPI Impact (2025 Benchmarks) |
|---|---|---|---|
| Claims Intake: health, P&C, loan defaults | OCR + Doc Classifier extracts 30-plus fields at 98% accuracy | Payout decision flows in < 40 min rather than multiple hours | • Cycle time reduced by 60% • Manual touches per claim ↓ 45% |
| Receivables Routing: invoices, remittance data | Risk-scoring model steers high-confidence items straight through | Finance closes cash gaps days sooner | • DSO (Days Sales Outstanding) ↓ 8 days avg • Throughput per clerk ↑ 2× |
| Fraud Pattern Guard: cards, ACH, transfers | Sequence model watches velocity, geo spread, device entropy | High-risk events halted in stream, clean ones glide forward | • False positives ↓ 40% • Fraud loss ratio ↓ 25% |
| KYC Onboarding: IDs, proof of address, AML lists | Entity matcher links docs across languages & formats | Customer verified in < 5 min, compliance teams focus on edge cases | • Manual reviews per 1 000 clients ↓ 50% • Onboarding abandonment ↓ 30% |
| Audit & Compliance Trail | Orchestrator logs model ID, confidence, route, outcome | Regulators receive end-to-end lineage on demand | • Prep time for audits ↓ 70% • Fine exposure curve bends toward zero |

Logistics: Exception Handling, Delivery ETA Optimization

Execution starts at intake. In logistics, that means scanned invoices, variable packing slips, handwritten shipment notes. A FastAPI pipeline processes each document directly — classifies format, extracts values, and normalizes structure using OCR calibrated for field-level inconsistency. Each input goes in cleanly, without depending on predefined templates.
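The classify, extract, normalize core of such a pipeline can be sketched without templates as plain functions; the FastAPI endpoint and OCR step are omitted, so the input here is text assumed to have come out of OCR already. The keyword cues, regex patterns, and the day-first date convention are all assumptions for illustration.

```python
import re
from datetime import datetime

# Hypothetical keyword cues used to guess the document type.
DOC_TYPES = {"invoice": "invoice", "packing list": "packing_slip", "shipment note": "shipment_note"}

# The amount must start and end with a digit so trailing punctuation is not captured.
AMOUNT_RE = re.compile(r"total[:\s]*(\d(?:[\d.,]*\d)?)", re.IGNORECASE)
DATE_RE = re.compile(r"(\d{1,2})[./-](\d{1,2})[./-](\d{4})")  # assumes day-first dates


def classify(text: str) -> str:
    lowered = text.lower()
    for cue, doc_type in DOC_TYPES.items():
        if cue in lowered:
            return doc_type
    return "unknown"


def normalize_amount(raw: str) -> float:
    # Assumption: the last separator is the decimal mark ("1.234,50" or "1,234.50").
    last = max(raw.rfind(","), raw.rfind("."))
    if last == -1:
        return float(raw)
    whole = re.sub(r"[.,]", "", raw[:last])
    return float(f"{whole}.{raw[last + 1:]}")


def extract(text: str) -> dict:
    record = {"doc_type": classify(text)}
    if (m := AMOUNT_RE.search(text)):
        record["total"] = normalize_amount(m.group(1))
    if (m := DATE_RE.search(text)):
        day, month, year = (int(g) for g in m.groups())
        record["date"] = datetime(year, month, day).date().isoformat()
    return record
```

For "INVOICE #77 / Date: 03.04.2025 / Total: 1.234,50 EUR" this yields `{"doc_type": "invoice", "total": 1234.5, "date": "2025-04-03"}`. The decimal-mark heuristic misreads a bare thousands separator such as "1,234", which is one reason real pipelines calibrate per field and region.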

From there:

- Parsed data flows straight into orchestration, where invoice details are aligned with order metadata.

- Certificate templates adapt by country.

- Routing logic computes the route from cargo type, destination, and compliance schema.

When issues arise, every exception enters a defined channel. Classification models assign cause codes — incomplete fields, currency mismatch, duplicate line items. Each issue comes with context, enters a resolution queue, and kicks off SLA tracking. The system assigns ownership and initiates next steps without delay.
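A minimal sketch of that exception channel, assuming three hypothetical cause codes, each mapped to an owning team and an SLA window. Real cause assignment would come from a trained classifier; simple checks stand in for it here.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical policy: cause code -> (owning team, SLA window).
CAUSE_POLICY = {
    "INCOMPLETE_FIELDS": ("data_ops", timedelta(hours=4)),
    "CURRENCY_MISMATCH": ("finance", timedelta(hours=8)),
    "DUPLICATE_LINE_ITEM": ("billing", timedelta(hours=24)),
}


@dataclass
class ExceptionTicket:
    doc_id: str
    cause: str
    owner: str
    due: datetime  # SLA deadline starts counting when the ticket opens


def assign_cause(doc: dict) -> str:
    if any(v in (None, "") for v in doc["fields"].values()):
        return "INCOMPLETE_FIELDS"
    if doc["invoice_currency"] != doc["order_currency"]:
        return "CURRENCY_MISMATCH"
    skus = [line["sku"] for line in doc["lines"]]
    if len(skus) != len(set(skus)):
        return "DUPLICATE_LINE_ITEM"
    return "OK"


def open_ticket(doc: dict, now: datetime) -> Optional[ExceptionTicket]:
    cause = assign_cause(doc)
    if cause == "OK":
        return None  # clean documents never enter the exception queue
    owner, sla = CAUSE_POLICY[cause]
    return ExceptionTicket(doc["id"], cause, owner, now + sla)
```

Because owner and deadline are set the moment the cause code is assigned, nobody has to decide who picks up the issue.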

To maintain delivery precision, ETA models combine real-time and historical inputs — GPS signals, customs records, warehouse scans, route history.

Forecasts update continuously. As timelines shift, downstream systems recalibrate: warehouse staging, customer notification, delivery load-balancing — each linked to state change, each logged, each measurable.

Work never sits idle.
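As a rough illustration of combining live and historical inputs, the sketch below blends current GPS speed with the route's historical average speed, adds an expected customs wait, and notifies downstream systems only when the forecast moves by more than a threshold. The 0.6 live weight and the 4% notify threshold are hypothetical, and production ETA models are usually learned rather than hand-weighted.

```python
def estimate_eta_hours(remaining_km: float, live_speed_kmh: float,
                       historical_speed_kmh: float, customs_wait_h: float = 0.0,
                       live_weight: float = 0.6) -> float:
    """Blend live and historical speed, then add the expected border/customs delay."""
    if not 0.0 <= live_weight <= 1.0:
        raise ValueError("live_weight must be in [0, 1]")
    blended = live_weight * live_speed_kmh + (1 - live_weight) * historical_speed_kmh
    if blended <= 0:
        raise ValueError("blended speed must be positive")
    return remaining_km / blended + customs_wait_h


def should_notify(previous_eta_h: float, new_eta_h: float, threshold: float = 0.04) -> bool:
    """Recalibrate downstream (staging, customer notice) only on a meaningful shift."""
    return abs(new_eta_h - previous_eta_h) / previous_eta_h > threshold
```

For 300 km remaining at 60 km/h live and 80 km/h historical, the blended speed is 68 km/h, giving about 4.4 h of driving plus any customs wait.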

Now the real throughput story unfolds:

| Workflow Moment | Automation Move | Immediate Win | KPI Impact (2025 Benchmarks) |
|---|---|---|---|
| Inbound Docs: invoices, packing lists, export forms | OCR + Document Classifier turns any PDF into structured data | No hand-typing, no template hunting | • 8-12 min saved per file • Data-entry errors ↓ 90% |
| Order Alignment: match invoice to order in ERP | Entity Resolver links SKUs, values, and Incoterms automatically | Single source of truth across finance & warehouse | • Duplicate records eliminated • Disputes per order ↓ 65% |
| Regulatory Prep: export certificates, customs codes | Rule-based generator selects the right form for 34 jurisdictions | Paperwork ready before the truck leaves the dock | • Clearance delays nearly zero • On-time departure ↑ 22% |
| Exception Handling: weight gaps, currency mismatches | Anomaly Classifier routes issues with cause tag and SLA timer | Staff review only the items that truly need judgment | • Manual touches per 1 000 orders ↓ 50% • Recovery time per error ↓ 60% |
| Live ETA Updates: traffic, border queues, scans | Forecast Model recalculates ETA and pushes updates downstream | Warehouse and customer see the same realistic arrival time | • ETA variance held within ±4% • Dock idle hours ↓ 18% |
| End-to-End Visibility: every step logged | Event-driven orchestration writes a complete audit trail | Instant trace for compliance or root-cause dives | • Audit prep time cut by days • Issue-to-resolution cycle ↓ 40% |

Manufacturing: Predictive Maintenance, QA Automation, Inventory Planning

Each production cycle carries exposure. Load shifts, tool wear, and feed delays — minor variances compound into yield loss. This is where Intelligent Automation steps in, equipped with sequence awareness:

- Telemetry streams from line-side sensors.

- Models track the acceleration curve, thermal drift, and vibration spectrum.

- Maintenance commands fire on signal shape — embedded inside the cycle, scheduled before performance degrades.
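A simplified sketch of firing maintenance on signal behavior: compute the RMS energy of each vibration window and trigger once it stays above a multiple of the healthy baseline for several consecutive windows. RMS is a deliberate simplification of "signal shape" (no spectrum or thermal drift here), and the 1.5× ratio and three-window streak are hypothetical parameters.

```python
import math


def rms(samples) -> float:
    """Root-mean-square amplitude of one vibration window."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def maintenance_trigger(windows, baseline_rms: float, ratio: float = 1.5, consecutive: int = 3):
    """Return the index of the window where maintenance should fire, or None."""
    streak = 0
    for i, window in enumerate(windows):
        if rms(window) > ratio * baseline_rms:
            streak += 1
            if streak >= consecutive:
                return i
        else:
            streak = 0  # a single spike is not a trend
    return None
```

Requiring a streak instead of a single reading is what lets the command fire inside the cycle, before degradation, without reacting to every transient spike.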

Meanwhile, quality doesn’t depend on sampling. Vision models scan every edge, every weld, every contour. Each part becomes a decision point:

- Surface defects trigger reclassification.

- Deviation from dimensional spec activates reroute logic.

- Every decision is logged, tied to the batch, timestamp, and operator.

- Feedback moves upstream as model adjustment.

- QA becomes a loop — self-correcting, time-bound, intensely local.

On the inventory side, planning evolves beyond static reorder points. Consumption rates are reflected in real-time inventory graphs, supplier reliability scores and historical variance influence part velocity, and replenishment requests run through orchestration: volume is driven by urgency, and supplier mix is shaped by constraints.
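One standard way to replace a fixed reorder point is to recompute it from observed data: ROP = d̄·L̄ + z·√(L̄·σ_d² + d̄²·σ_L²), where d̄ and σ_d are the mean and standard deviation of daily consumption, L̄ and σ_L are the mean and standard deviation of supplier lead time, and z comes from the target service level. Treating lead-time variance as the measure of supplier reliability is an assumption of this sketch; the function name is hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def reorder_point(daily_usage, lead_times_days, service_level: float = 0.95) -> float:
    """Reorder point with safety stock for variable demand and variable lead time."""
    d, sigma_d = mean(daily_usage), stdev(daily_usage)
    lead, sigma_lead = mean(lead_times_days), stdev(lead_times_days)
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for 95%
    safety_stock = z * math.sqrt(lead * sigma_d ** 2 + d ** 2 * sigma_lead ** 2)
    return d * lead + safety_stock
```

With perfectly steady usage of 10 units/day and a fixed 5-day lead time, the reorder point is exactly 50; any variance in consumption or in supplier delivery pushes it higher, and so does a stricter service level.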

The production floor runs without manual intervention, and the system learns as it goes. In short, this is where precision translates into measurable return.

| Workflow Moment | Automation Move | Immediate Win | KPI Impact (2025 Benchmarks) |
|---|---|---|---|
| Line Health: motors, bearings, drives | Predictive-maintenance model watches vibration, heat, and load in real time | Service happens before a fault, not after a stop | • Unplanned downtime ↓ 25-40% • Maintenance cost per shift ↓ 18% |
| Quality Check: edges, welds, surfaces | Vision AI inspects every part, every pass | Defects flagged instantly; bad units never leave the line | • Rework rate held < 1% • Customer returns ↓ 35% |
| Tool Wear Feedback: drill bits, cutters, dies | Sensor signals trigger micro-adjustments or tool swap | Dimensional drift corrected inside the cycle | • Yield loss ↓ 12% • Scrap cost per batch ↓ 30% |
| Inventory Pulse: raw, WIP, finished goods | Consumption + velocity model updates stock graph live | Reorder size and timing adapt to actual demand | • Stock-outs cut to near zero • Excess inventory days ↓ 20% |
| Supplier Mix: reliability vs. urgency | Orchestrator chooses vendor based on lead-time score | Parts arrive when needed, at optimal cost | • Rush-order fees ↓ 28% • On-time supply ↑ 15% |
| Continuous Learning Loop: log → adjust | Every outcome feeds the next model retrain | Floor conditions inform tomorrow's parameters | • Process drift caught within hours • OEE (Overall Equipment Effectiveness) ↑ 8-10% |

The Stack Behind the Scenes: What Powers IA

Here is what sits under the hood of Intelligent Automation, gear by gear. Each action node runs on a decision engine: scoped, modular, latency-aligned. This stack doesn't just display results. It moves work.

1. Language Models Operate Upstream

At the point of entry, LLMs absorb ambiguity: a scanned export declaration, a support ticket with five nested requests, a chat message in mixed syntax. The sequence starts here, with OCR transforming layout into text and transformer layers resolving structure from entropy:

- The system first processes input text with custom-trained AI models, then labels each piece with its required action, applicable rules, and relevant context.

- Named entities are extracted and anchored to reference records.

- Intent routes through beam-constrained classifiers — weighted by jurisdiction, confidence entropy, and process availability.
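"Confidence entropy" can be made concrete as the normalized Shannon entropy of the classifier's probability distribution: near 0 when one intent dominates, 1 when all intents are equally likely. The sketch below acts only on confident predictions whose target process is available; the 0.5 cut-off and the route names are hypothetical, and jurisdiction weighting is left out.

```python
import math


def normalized_entropy(probs: dict) -> float:
    """Shannon entropy of a probability distribution, scaled to [0, 1]."""
    if len(probs) < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs.values() if p > 0)
    return h / math.log(len(probs))


def route_intent(probs: dict, available: set, max_entropy: float = 0.5) -> str:
    """Act on the top intent only if the prediction is sharp and its process can take work."""
    top = max(probs, key=probs.get)
    if normalized_entropy(probs) > max_entropy:
        return "human_review"  # probability mass too spread out to act on
    if top not in available:
        return "queue_until_available"
    return top
```

A distribution like `{"refund": 0.9, "address_change": 0.05, "complaint": 0.05}` has a normalized entropy of about 0.36 and routes straight to `refund`; a uniform split goes to review.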

Critically, memory persists across calls. Agents recall entity states, decision paths, fallback outcomes. Each execution carries lineage — text source, model inference, trigger index.

In other words, these aren’t just prompt completions. They’re instructions anchored in inference — contract resolution, document routing, compliance enrichment — executed through orchestration without human gatekeeping.

2. Context Memory Holds Execution State

This is where the plot thickens. Execution unfolds in sequence, and memory governs the boundary between steps. Context modules hold entity state, constraint logic, and resolution lineage across calls. This layer doesn’t store messages. It structures transitions.

Each invocation receives enriched prompt input: current instruction plus memory-bound vectors — prior action index, constraint graph, entity history, fallback route. Context summarization distills long-form exchanges into state vectors. These summaries enter as invisible scaffolding — informing model behavior without token inflation.

Beyond summarization, session memory extends into vector retrieval. Domain-specific embeddings link customer IDs, document patterns, and prior intents. Lookup returns high-relevance payloads (match-scored, date-ranked, context-filtered) and injects them into the execution stream. No repetition, no re-entry.
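The match-scored, date-ranked, context-filtered lookup can be sketched in memory: filter by customer, score by cosine similarity, drop weak matches, and break ties by recency. The `MemoryItem` type, the 0.5 minimum score, and precomputed embeddings are assumptions; a real deployment would query a vector database with approximate nearest-neighbor search instead of scanning a list.

```python
import math
from dataclasses import dataclass
from datetime import date


@dataclass
class MemoryItem:
    text: str
    embedding: list
    customer_id: str
    created: date


def cosine(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec, items, customer_id, k=3, min_score=0.5):
    """Context-filter by customer, match-score by cosine, rank ties by date (newest first)."""
    candidates = [
        (cosine(query_vec, item.embedding), item.created, item)
        for item in items
        if item.customer_id == customer_id
    ]
    relevant = [c for c in candidates if c[0] >= min_score]
    relevant.sort(key=lambda c: (c[0], c[1]), reverse=True)
    return [item for _, _, item in relevant[:k]]
```

The returned items are what would be injected into the next model call, so the agent does not have to ask the customer again for context it already holds.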

As a result, agentic flows run on memory as substrate:

- Multi-step tasks anchor to previous instruction state.

- Corrections rebind entities.

- Confirmations resolve constraint branches.

- Each move is guided by what has already occurred — and what remains unresolved.

The key point: what flows through context isn't dialogue. It's intent evolution, governed and stored with surgical recall.

3. Tabular Classifiers Drive Structured Inference Mid-Flow

This is where the pieces begin to fall into place. Each decision fork within a digital process binds to a classifier. Inputs arrive structured: transaction records, extracted invoice fields, KYC data, diagnostic metrics. Models don’t evaluate in isolation.

Take a mortgage flow, for example. Applicant data enters with full table lineage: income tier, credit window, geo-risk coefficient, employment volatility index. Classifier output anchors to predefined tiers — auto-approve, conditional queue, compliance referral. Execution happens fast — with paths routing in just ~38ms across multi-model orchestration.
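Anchoring classifier output to predefined tiers usually reduces to ordered cut-offs on a score, with hard flags overriding the score entirely. The cut-offs below (0.85 and 0.50) and the idea of "hard flags" such as a sanctions hit are hypothetical; the model producing `approval_prob` is out of scope.

```python
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    CONDITIONAL_QUEUE = "conditional_queue"
    COMPLIANCE_REFERRAL = "compliance_referral"


# Hypothetical cut-offs on the model's approval probability, checked from highest to lowest.
TIERS = [(0.85, Route.AUTO_APPROVE), (0.50, Route.CONDITIONAL_QUEUE)]


def route_application(approval_prob: float, hard_flags: list) -> Route:
    if hard_flags:  # e.g. a sanctions hit always goes to compliance, whatever the score
        return Route.COMPLIANCE_REFERRAL
    for cutoff, route in TIERS:
        if approval_prob >= cutoff:
            return route
    return Route.COMPLIANCE_REFERRAL
```

Keeping the tiers as data rather than code makes the cut-offs reviewable and versionable alongside the model.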

In support operations, ticket pipelines classify inbound events based on origin trace, urgency meta-tag, issue class, and customer LTV band. Decision engines segment requests into three execution graphs (low-effort automation, adaptive reply, or tier-two transfer), scored by downstream load and SLA friction.

In fraud scenarios, classifiers run on encrypted tabular feeds: transaction stream, IP shifts, device ID mismatch, historical behavioral vector. Each frame is analyzed against a pre-trained anomaly template. When drift breaches the threshold, the system launches a hold sequence.

Importantly, every inference instance is observable. Confidence scores, deviation indexes, and routing justifications enter the runtime ledger: traceable, reversible, auditable. Orchestration engines read each model output not as an insight but as an executable trigger.

4. Vision Models Execute From Live Camera Input

Here's where the real engineering happens. Inference runs on streams, not files. Cameras feed edge models directly, and each frame becomes an event.

On the production line, image intake splits into parallel streams: RGB, thermal, depth. Each stream is tagged by origin, timestamp, and SKU. The vision stack parses frame by frame: edge detection, anomaly scoring, spatial deviation. Models run against tolerance maps: gap distance, surface uniformity, weld continuity, symmetry index.

As soon as a defect is detected, it enters the action graph. The output triggers a branch: halt, reroute, notify, or recheck. No rule engine. No operator step. Execution responds to variance with sub-100ms latency. Each event is logged with a traceable hash covering model ID, version vector, camera location, and product ID.
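A sketch of turning one detection into a branch plus a traceable log record. The defect-to-action table, the 0.8 score threshold, and all field names are hypothetical. The hash is SHA-256 over canonical JSON (sorted keys), so the same event always produces the same fingerprint and any later edit to the record becomes detectable.

```python
import hashlib
import json

# Hypothetical mapping from defect class to action branch.
ACTIONS = {"crack": "halt", "misalignment": "reroute", "surface_blur": "recheck"}


def defect_event(defect: str, score: float, model_id: str, model_version: str,
                 camera: str, product_id: str, threshold: float = 0.8) -> dict:
    # Unknown defect classes above threshold fall back to "notify"; weak scores pass.
    action = ACTIONS.get(defect, "notify") if score >= threshold else "pass"
    record = {
        "defect": defect, "score": score, "action": action,
        "model_id": model_id, "model_version": model_version,
        "camera": camera, "product_id": product_id,
    }
    payload = json.dumps(record, sort_keys=True).encode()
    record["trace_hash"] = hashlib.sha256(payload).hexdigest()
    return record
```

The record, not just the action, is what gets emitted, so every halt or reroute can later be traced back to the exact model version and camera.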

For inventory tasks, shelf-scanning systems classify SKU presence, box fill rate, and orientation delta. Real-time tags write to the stock graph. Gaps trigger reorders. Overstocks initiate auto-bundling.

In inspection, surface resolution feeds semantic segmentation. Paint blur, microfracture, material misalignment — all segmented, classified, weighted. Decision goes upstream: batch quarantine, line recalibration, source review.

In vision, every layer routes through orchestration, and model outputs flow as events. This is where the rubber truly meets the road.

A 6-Step AI-First Automation Implementation Framework

The following framework outlines a practical path to executing intelligent automation in real-world systems.

Step 1. Identify Repetitive Entropy

To find these gaps, remember that every system has drift zones: places where the structure blurs and identical inputs trigger different actions. Not just once, but repeatedly. Claims with branching responsibility. Orders with routing collisions. Diagnoses with loop warnings. These moments waste employee energy, dilute SLA integrity, and fragment data sequencing.

For instance, in finance the pattern repeats: a scanned invoice enters with partial metadata, policy ambiguities, and region-specific clauses. In logistics, a human tags “priority”, opens the ERP, and hand-routes a ticket across two systems. In manufacturing, yield loss flags early, but the signals fork.

This is entropy at scale. And every fork carries context — document lineage, constraint structure, anomaly vector, jurisdictional scope. Logging these inflections builds a volatility index for execution: frequency of divergence, impact of reroute, recurrence of manual override.

Start here. Extract your automation anchor points. Track where high-volume tasks diverge by metadata, policy, or tolerance drift. Entropy predicts opportunity.
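The volatility index described above can be computed from ordinary task logs. This sketch assumes each log entry records the process name, whether the path diverged from the standard route, the minutes added by rerouting, and whether a human overrode the outcome; those field names are hypothetical. Processes come back sorted by divergence rate, so the top entries are the candidate automation anchor points.

```python
from collections import defaultdict


def volatility_index(log: list) -> dict:
    """Per process: frequency of divergence, reroute cost, and manual-override recurrence."""
    stats = defaultdict(lambda: {"runs": 0, "forks": 0, "reroute_min": 0.0, "overrides": 0})
    for entry in log:
        s = stats[entry["process"]]
        s["runs"] += 1
        s["forks"] += entry["diverged"]            # booleans count as 0/1
        s["reroute_min"] += entry.get("reroute_minutes", 0.0)
        s["overrides"] += entry["manual_override"]
    report = {
        process: {
            "divergence_rate": s["forks"] / s["runs"],
            "avg_reroute_min": s["reroute_min"] / s["runs"],
            "override_rate": s["overrides"] / s["runs"],
        }
        for process, s in stats.items()
    }
    # Most volatile first.
    return dict(sorted(report.items(), key=lambda kv: kv[1]["divergence_rate"], reverse=True))
```

A process where half the runs fork and each fork costs half an hour will rank above one that always follows the standard route, which is exactly where the entropy map should point first.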

Step 2. Structure the Entry Point

At the core of IA: clarity in, intelligence out. Every action begins with format.

The system absorbs friction at the threshold. Input lands — invoice scan, order email, machine log, request form. Raw. Each element holds potential, none of it aligned. In short, before automation executes, structure must emerge.

OCR engines parse the fields. Layout transformers align zones. Entities are extracted from noise: payee, sum, region, date. Every label carries a position, every value a tag, every block a context.

Language models run intake routing. Sequence tokens inform intent, constraints, fallback paths. The same sentence, two meanings — each resolved through domain-tuned prompt heads. Risk flagged by metadata; exception by pattern.

Downstream, classifiers require shape. Table fields, option lists, enums, ranges. Ideally, only structure feeds action. Free-form blurs execution. In production, each node expects payload: class, vector, timestamp, rule path.

Every workflow starts with this gate. Structure the stream. Map ambiguity to schema, and anchor every action to a format that models read, memory holds, and orchestration binds.
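Mapping ambiguity to schema can be as simple as a typed contract that every node enforces at the gate. This sketch assumes the payload fields the text lists (class, vector, timestamp, rule path); `class` is renamed `label` because it is a Python keyword, and the `NodePayload` type and validation rules are hypothetical. Malformed input fails loudly instead of drifting downstream.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class NodePayload:
    """Hypothetical contract for what a downstream node accepts."""
    label: str           # predicted class
    vector: tuple        # feature or embedding values
    timestamp: datetime  # must be timezone-aware
    rule_path: str       # e.g. "finance/invoice/standard"

    def __post_init__(self):
        if not self.label:
            raise ValueError("label is required")
        if not self.vector or not all(isinstance(v, (int, float)) for v in self.vector):
            raise ValueError("vector must be a non-empty sequence of numbers")
        if self.timestamp.tzinfo is None:
            raise ValueError("timestamp must be timezone-aware")
        if "/" not in self.rule_path:
            raise ValueError("rule_path must look like 'domain/flow/branch'")


def to_payload(raw: dict) -> NodePayload:
    """Map a loosely structured intake record onto the node contract (raises on bad input)."""
    return NodePayload(
        label=raw["label"],
        vector=tuple(raw["vector"]),
        timestamp=datetime.fromisoformat(raw["timestamp"]),
        rule_path=raw["rule_path"],
    )
```

Freezing the dataclass means a payload cannot be mutated after validation, so what the classifier reads is exactly what passed the gate.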

Step 3. Embed Classifiers into the Flow

Now, this is where it gets tricky.

Once data enters the system in a structured, tabular format — whether invoices, onboarding forms, transport documents, or sensor logs — classifiers drive execution. Models are embedded directly within business process flows. Each output is an instruction.

For instance, a document classifier takes in parsed invoice fields and assigns a routing code based on document type, payment terms, and region. Approval is triggered for standard cases, while exceptions are immediately routed for compliance review or flagged for escalation, all in real time. Manual intervention fades from the critical path.

In decision-heavy areas like finance, risk models ingest transaction tables and output a decision: approve, hold, or trigger an audit. Every transaction is scored on multidimensional vectors — amount, historical velocity, device fingerprint, anomaly signatures — so decisions are made at the point of entry. Anomalies generate structured payloads that flow automatically to investigation pipelines.

Order management flows use classifiers to identify shipment mismatches, incomplete packing data, or regulatory inconsistencies. The model’s decision initiates a branch: delay, reroute, or auto-resolve, all mapped to corresponding ERP or WMS actions, without waiting for human review.

Technically, every classifier sits as a node in the runtime graph — executing, logging, and versioning every decision. Outputs are API-driven, payloads are structured for traceability, and every branch is observable in the orchestration layer. Inference becomes a trigger; the system moves with each model’s response.
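One lightweight way to make every classifier a logged, versioned node is a decorator that records input, decision, version, and latency on each call. `DECISION_LOG` stands in for the orchestration layer's decision store, and `route_invoice` is a hypothetical rule standing in for a trained model.

```python
import functools
import time
from typing import Callable

DECISION_LOG = []  # stand-in for the orchestration layer's decision store


def classifier_node(name: str, version: str):
    """Decorator: wrap a classifier so every call is logged with its version and latency."""
    def wrap(predict: Callable[[dict], str]):
        @functools.wraps(predict)
        def node(payload: dict) -> str:
            start = time.perf_counter()
            decision = predict(payload)
            DECISION_LOG.append({
                "node": name,
                "version": version,
                "input": dict(payload),
                "decision": decision,
                "latency_ms": (time.perf_counter() - start) * 1000,
            })
            return decision
        return node
    return wrap


@classifier_node("invoice_router", "1.4.0")
def route_invoice(fields: dict) -> str:
    # Hypothetical rule standing in for a trained model.
    if fields["amount"] < 10_000 and fields["terms"] == "net30":
        return "approve"
    return "compliance_review"
```

Because logging lives in the wrapper, swapping in a retrained model only changes the version string; observability comes for free with every node.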

Step 4. Let Vision Systems Drive the Flow

Cameras no longer just observe — they participate. Each visual input becomes a functional unit, interpreted instantly and acted on without buffering or delay.

In manufacturing, live feeds identify production flaws as they form — edge misalignments, heat variations, assembly gaps. The system adjusts on the fly: rerouting units, halting equipment, updating digital twins. Every anomaly is traced, versioned, and tied to its origin point.

In logistics, shelf and floor scans register product movement in real time. Model outputs inform stocking, bundling, and dispatch — dynamically, without pause.

Inspection systems run multi-modal analysis, integrating color, depth, and heat. Flags become forward signals — triggering process changes, supplier checks, or immediate quarantines.

Step 5. Build Orchestration as Infrastructure

Infrastructure evolves from static plumbing to the operational core.

Model outputs are events. Orchestration is the protocol. Everything else is drag.

To illustrate the concept: an inference triggers a call. A FastAPI endpoint receives the payload (entity, decision, vector, timestamp). The orchestrator parses it, and the routing table selects the downstream target: ERP, WMS, CRM, or MES. Every node expects an event, not a summary. Each path defines a state change.

In other words, handlers execute under constraints: retry count, error branch, idempotency hash. The exception route tags the incident, launches a replay, and logs the artifact in the audit mesh. On the success path, the handler updates the business object, confirms via the response vector, and moves execution to the next branch.
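Those handler constraints can be sketched in a few lines: an idempotency key derived from the event content, a bounded retry loop, and a dead-letter list as the exception route for later replay. The in-memory dicts stand in for durable stores, the names are hypothetical, and backoff between retries is omitted for brevity.

```python
import hashlib
import json

PROCESSED = {}    # idempotency key -> result (stand-in for a durable store)
DEAD_LETTER = []  # exception route: failed events kept for replay


def idempotency_key(event: dict) -> str:
    return hashlib.sha256(json.dumps(event, sort_keys=True).encode()).hexdigest()


def handle(event: dict, action, max_retries: int = 3):
    key = idempotency_key(event)
    if key in PROCESSED:  # duplicate delivery: return the earlier result, never re-apply
        return PROCESSED[key]
    last_error = None
    for _ in range(max_retries):
        try:
            result = action(event)
            PROCESSED[key] = result
            return result
        except Exception as exc:  # a real handler would catch narrower, retryable errors
            last_error = exc
    DEAD_LETTER.append({"event": event, "error": repr(last_error), "attempts": max_retries})
    return None
```

If the broker delivers the same approval event twice, the business object is updated once; if the downstream system keeps failing, the event lands in the dead-letter list with its error instead of disappearing.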

Every action enters observability. Event log writes model version, decision cause, execution latency, and rollback route. Monitoring agents track event flow, signal drift, and SLA breach. No batch, no polling — only atomic updates, streamed and timestamped.

Integration is live. Every inference becomes a transaction, allocation, update, or trigger. No alert waits. No approval loop. Each decision enters the system state, visible and replayable with full lineage.

Put simply, infrastructure’s true value is measured by how much gets done without intervention.

Step 6. Instrument Context and Memory

Context modules control the execution like a living substrate. Each action writes a line — units, goals, constraints, correction status — to a session-bound memory graph. When a new event arrives, the system adds historical context, including the last interaction, open exceptions, domain-specific settings, and unresolved edges.

Behind the scenes, vector stores link each relevant artifact: customer record, previous form status, invoice sequence, anomaly resolution, and regulatory flag. Entity managers retrieve attributes — such as names, dates, and identifiers — across multiple workflows and cross-channel interactions.

Next, correction logic is triggered through persistent memory. The system replays unresolved branches, surfaces incomplete approvals, and adds the handling of previous anomalies as evidence for the next branch. Each decision carries a callback: prompt augmentation, constraint reinforcement, and entity anchoring.

Workflows with a long time horizon benefit from summarization. State distillation compresses the session history, keeping only the most essential steps and results available for immediate callback or future orchestration. Persistent storage enables agentic AI to adapt, escalate, and redirect without losing continuity, and supports both real-time and asynchronous execution across distributed teams and subsystems.
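A minimal sketch of state distillation, assuming session history is a list of events with a `kind` and a `ref`. It keeps essential event kinds, tracks which exceptions are still open, and retains only the last few raw turns. The `ESSENTIAL` set and the output shape are hypothetical; an LLM-based summarizer could replace the rule-based selection.

```python
# Hypothetical event kinds that must survive summarization.
ESSENTIAL = {"decision", "exception_open", "exception_closed", "override", "approval"}


def distill(history: list, keep_last: int = 3) -> dict:
    """Compress a session log into key steps, unresolved exceptions, and the latest turns."""
    open_exceptions = {}
    for event in history:
        if event["kind"] == "exception_open":
            open_exceptions[event["ref"]] = event
        elif event["kind"] == "exception_closed":
            open_exceptions.pop(event["ref"], None)
    return {
        "open_exceptions": sorted(open_exceptions),
        "key_steps": [f'{e["kind"]}:{e["ref"]}' for e in history if e["kind"] in ESSENTIAL],
        "recent": history[-keep_last:],
        "original_length": len(history),
    }
```

The distilled state is small enough to pass into every subsequent call, yet still tells the agent which branches remain unresolved.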

In particular, every override, escalation, and fallback passes through the context network: time-stamped, assigned, verifiable for compliance, and weighted for iterative learning. Context memory forms the living backbone (decision history, operating status, test log, and business logic), all leading to one result: an execution that never resets and keeps evolving.

What to Expect: ROI Benchmarks from 2025 Data

Intelligent Automation delivers quantifiable advantages across every layer of operations. The 2025 landscape favors companies that execute with architectural clarity.

Cycle Time Compression

On average, execution windows contract. Claims and approvals move through a single inference loop — document is scanned, intent extracted, exceptions classified, and decision routed within minutes. In logistics, export documentation and certificate bundles are generated in a single automated stream: upload, validation, and export — all tracked at every stage. Manufacturing lines tighten feedback cycles; QA and predictive maintenance models reduce stoppage duration by over 60%.

Manual Effort Reduction

More importantly, models replace routine judgment. Entity extraction, tabular classification, and live vision monitoring eliminate redundant reviews and manual escalations. In real deployments, export logistics has cut document control from two FTEs per shift to a single self-healing pipeline. Finance teams shift their effort from checking to optimizing.

Error Rate and Data Quality

Structured inference means fewer edge-case errors. Automated routing, memory-driven resolution, and audit-anchored handoffs keep exception handling inside the workflow. In manufacturing, QA vision models maintain sub-1% rework rates — every defect logs with batch, timestamp, and source, feeding model retraining and process improvement. False positives in fraud detection drop by up to 40% as entity history and constraint memory refine each decision.

Cost-to-Serve and Productivity

Cumulatively, labor hours are redeployed away from manual review toward analysis, optimization, and service innovation. Transaction costs fall, and process bottlenecks shift from people to models. AI-first orchestration unlocks latent system capacity — more shipments per shift, faster claims closeout, and zero idle time at touchpoints. In real-world projects, internal benchmarks indicate a 21-35% reduction in operational costs per case.

Auditability and Compliance

Every execution is logged, including model version, confidence score, routing path, and override. Exception events and manual interventions are traced to specific payloads and system states. For regulated industries, this transparency streamlines both compliance checks and root cause audits.

Payback Period

In our experience, mid-size organizations routinely achieve ROI within 11 months. Best-in-class cases recover initial investment within 6-7 months, as each new integration magnifies system-wide effect.

This is when the full picture comes into focus.

Your Intelligent Automation Readiness Checklist

Execution relies on one layer: system readiness. High-performing automation stacks share a pattern — callable APIs, clear data lineage, embedded accountability.

This checklist captures those foundation signals. Each line reflects patterns we’ve seen in stable, high-throughput deployments: callable interfaces, traceable ownership, clean event propagation.

| Layer | Readiness Signals | Quick Stress Test | First Fix if Fail |
|---|---|---|---|
| Technical | • Core platforms expose real-time APIs (REST/gRPC) • OCR + parsing hit ≥ 95% field accuracy on inbound docs • Event broker streams model outputs in < 500 ms • Feature/vector store versions entities & embeddings | Upload a sample PDF → receive structured JSON → auto-write to staging DB. | • Stand up an API gateway • Swap to layout-aware OCR • Deploy Kafka/NATS for event flow |
| Operational | • “Entropy map” shows hotspots (≥ 20% manual forks) • Orchestrator (Temporal / Prefect / Airflow) manages retries & rollbacks • Single dashboard tracks cycle time, touchpoints, and rework | Pick the top process → count touches & measure time-to-close. Target: < 5 min, ≤ 3 touches. | • Tag forks in logs • Add idempotent tasks & SLA timers • Surface metrics in shared BI |
| Cultural & Governance | • Data Owner ≠ Process Owner, both named • Weekly drift review feeds retrain backlog • Audit log captures “Inference → Action” with model version & payload | Ask “Who signs off model vX in prod?” An instant answer is a pass. | • Define RACI for every model • Launch a drift Kanban • Pipe audit events to SIEM/ELK |

Sum Up

What you’ve just seen is more than a blueprint — it’s a velocity engine. Every classifier, every routing node, every memory vector exists to do one thing: move work forward with precision.

Execution no longer waits. It listens, adapts, acts. And the systems built this way? They scale without negotiation.

So the question isn’t whether to start. It’s where.

If you're mapping that first move, or validating the ground before you build, the Devox team can step in fast.

Frequently Asked Questions


How can I identify the processes in my company where orchestration would have the strongest compounding effect?

Look for tasks where every exception requires coordination, such as multiple emails, approvals, or workarounds. That’s usually where business logic has drifted outside the system. Intelligent Automation doesn’t just patch that — it reabsorbs it into the structure.


We already use RPA and some basic scripts. What’s the tipping point to go beyond that?

RPA moves clicks. Intelligent Automation moves the flow. The shift begins when you need continuity across systems, memory across calls, and decision logic that adjusts to live inputs. If your team spends more time rerouting than executing, it’s time to reassess.


How do I know our tech stack is “ready enough”?

Don’t aim for perfection. Aim for pathways. If your key systems expose APIs, if you can route events, and if your documents can be reliably parsed, you already have the foundation. From there, precision compounds.


What role should my Ops team play in this?

More than operators — they become curators of flow. It’s their process memory, their SLA experience, and their edge-case pattern recognition that shapes model behavior. Implementation isn’t tech-only. It’s shared authorship.


How do we start small, but real?

Select one high-volume process with frequent exceptions and clearly defined inputs. Don’t automate around it — automate through it. Instrument the flow, embed classifiers, and log decisions. Let that pilot carry its own metrics. From there, credibility spreads.


Who governs this once it’s live?

Think beyond IT. Intelligent Automation needs cross-functional governance: model owners, drift reviewers, escalation logic curators. The models learn from structure, and the structure is yours to evolve.