Imagine trying to build a jet engine in mid-flight — with half the parts still running on typewriters and the rest on quantum logic. This is what automation will feel like in 2025. The surface is buzzing with progress, but underneath, the design is collapsing under its own weight. Not because of speed, but because of form.

Legacy systems still move, but not because they’re solid — they persist like muscle memory. The gap isn’t speed; it’s structure, and this playbook closes it. You’ll see where fragmentation drains revenue, how schema-aligned intake eliminates re-keying, and why versioned decision payloads bake auditability into every action. Next, we map orchestration patterns that stretch across ERP, CRM, and MES, turning task counts into end-to-end throughput and unlocking scalability without code rewrites or risky cutovers.

Precision anchors the system. Let’s rebuild execution together.

2025 Automation Landscape: Why Execution Demands Architectural Precision

Now we’re getting to the heart of the system.

To illustrate the concept, let’s start with what still breaks in 2025. Legacy systems function by habit rather than by sound architecture. Business logic sits in spreadsheets. Workarounds flow through inboxes. Control exists in memory, not in execution. In 2025, this friction compounds across cycle time, exception handling, and SLA integrity.

Operational bottlenecks arise from repetition: the same task is handled differently across shifts. The same request is re-entered into multiple systems. Decision-making slows beneath layers of routing, review, and recovery. Execution has lost its structure.

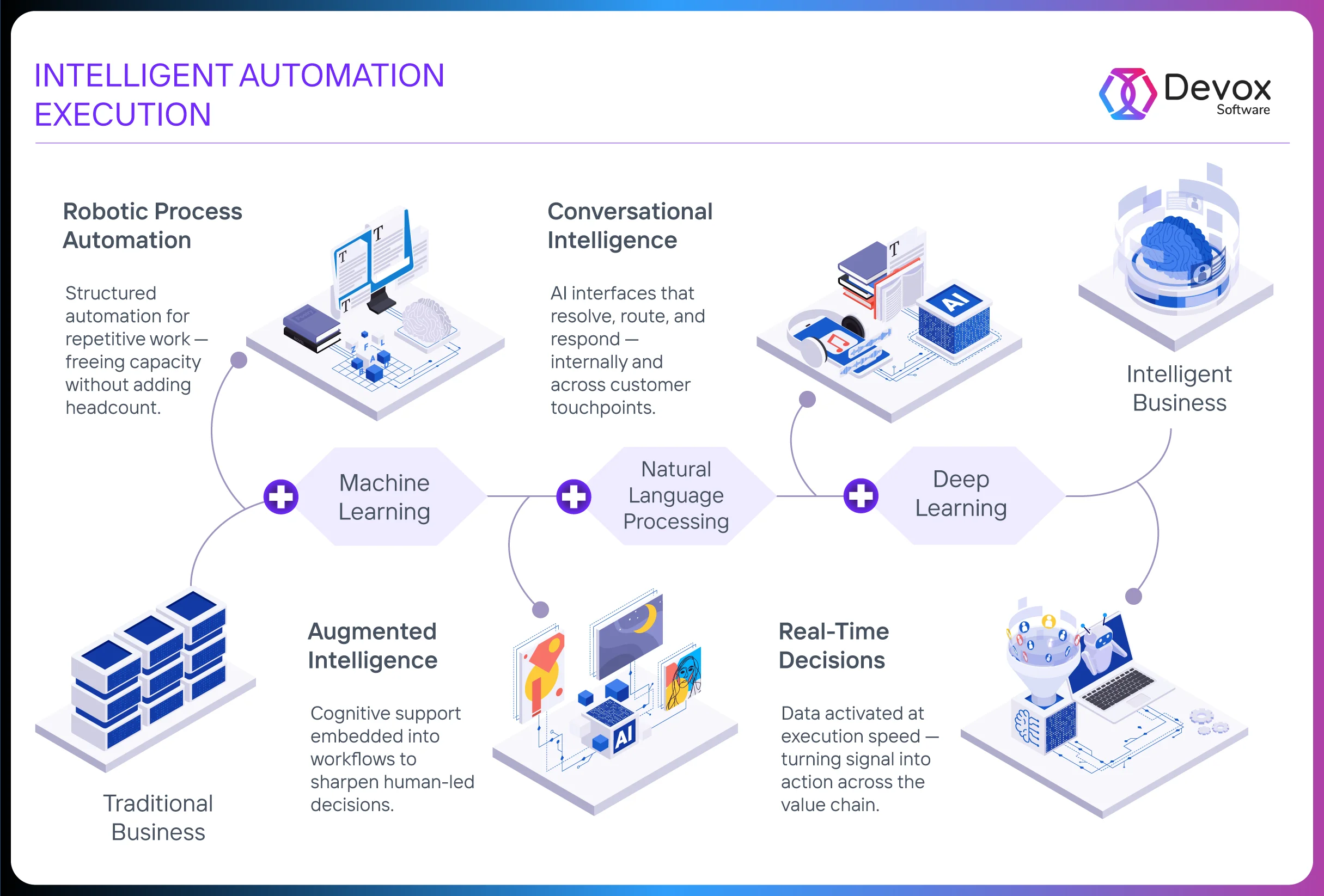

In short, the solution is not faster human effort but better architecture. For anyone wondering what intelligent automation is, the simple answer is that it introduces a structural alternative. Language models resolve ambiguity at the point of entry, and tabular classifiers define routing based on risk, value, and urgency. Context memory holds constraint, state, and lineage across turns. Action flows through orchestration that is composable, governed, and observable.

This isn’t digitization — it’s the architectural shift that enables scalable intelligent automation use cases across finance, logistics, and operations. Precision moves first: where inputs become schema, where models bind to outcomes, where throughput scales through signal, not coordination.

In high-frequency operations, execution speed compounds. Model-driven systems resolve on entry. Audit logs form in parallel. Decision paths harden into reusable flows. Mid-sized firms shift posture — from reactive oversight to engineered continuity.

Pinpointing Execution Friction in Legacy Systems

Breakdown accumulates at junctions. Where inputs drift beyond schema, where exceptions outpace resolution logic, and where human judgment fills the execution gap — those are the critical seams.

Finance workflows fork midstream. Document formats vary across jurisdictions, and entity structures are fragmented between KYC, AML, and onboarding pipelines. Risk-scoring pipelines stall on incomplete vectors. Exceptions circulate without resolution, lacking payloads, routing logic, and closure deadlines.

Logistics execution stretches across asynchronous systems. Scanned forms, manual overrides, and unindexed documents enter orchestration layers that are unequipped to handle variance. Each shipment becomes a custom flow. Each deviation routes into inbox-based triage. The system continues, but progress thins.

In manufacturing, machine telemetry delivers signal — vibration shape, thermal slope, speed variance — but orchestration lacks the boundary to act. QA flags accumulate. Yield loss compounds between detection and response. Inventory logic depends on buffer zones rather than flow prediction.

Across domains, structure dissolves where platforms rely on static rules and implicit handovers. The cost shows up as longer cycle times, rework, and decisions suspended between tools. Precision leaks at the edges, and scale buckles at the joints.

This is where intelligent automation comes into play. By recognizing pattern entropy, metadata collisions, and orchestration delays, it exposes the execution gaps and marks the points where automation should take over.

Modernization Paths: Rehost, Replatform, Refactor, Re-Architect — and Where AI Changes the Game

And this is where the rubber truly meets the road. Now let’s break down the modernization paths step by step.

Rehost: Precision Lift with Runtime Continuity

Rehosting redraws infrastructure boundaries — without rewriting the execution core. However, in 2025, this shift requires more than environmental replication. It requires runtime mapping, dependency closure, and signal preservation.

Inference-led discovery profiles each service. Models extract system call graphs, resource telemetry, and port-layer interaction patterns across historical runs. Each instance becomes a behavioral trace characterized by CPU burst rate, memory volatility, and I/O burst co-occurrence. These are compressed into move vectors — execution signatures matched to optimal target shapes.

At the same time, configuration extractors pull kernel settings, driver layers, and linked binaries. Then, compatibility engines run diff-matching across OS versions, library bindings, and network stack settings. Where mismatches emerge, drift zones are flagged and directed to automated mitigation paths — registry patch, container isolation, or policy inheritance — depending on the fault likelihood.
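The diff-matching step can be pictured with a small sketch. It assumes the source and target configurations have already been extracted into flat key-value maps. The function names, the setting keys, and the likelihood cut-offs that select a mitigation path are all hypothetical, chosen only to illustrate the flow.

```python
def find_drift(source: dict, target: dict) -> dict:
    """Return every setting whose value differs between source and target."""
    drift = {}
    for key in sorted(source.keys() | target.keys()):
        src, dst = source.get(key), target.get(key)
        if src != dst:
            drift[key] = (src, dst)
    return drift


def mitigation_path(fault_likelihood: float) -> str:
    """Pick a mitigation path from an estimated fault likelihood (0..1).

    The cut-offs are illustrative assumptions, not recommended values.
    """
    if fault_likelihood >= 0.7:
        return "container isolation"
    if fault_likelihood >= 0.3:
        return "registry patch"
    return "policy inheritance"


# Hypothetical extracted settings for one service.
source = {"os_version": "7", "tls_library": "1.0.2", "tcp_backlog": "128"}
target = {"os_version": "9", "tls_library": "3.0.7", "tcp_backlog": "128"}
drift_zones = find_drift(source, target)
```

Here `drift_zones` contains only the two settings that changed; in practice each flagged key would be scored and passed to something like `mitigation_path`.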

To make this work, orchestration must operate in near-real time. Once rehosted, orchestration syncs live services through observability relays. Each call logs the latency vector, error path, and state hash. Signal fidelity checks run in sequence: heartbeat, SLA compliance, anomaly surface. The system doesn’t pause — it measures movement against expected behavioral baselines and triggers reallocation before the thresholds are breached.
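As a rough illustration of checking signal fidelity in sequence, the sketch below runs heartbeat, SLA, and headroom checks against a baseline. The `Baseline` record, the status strings, and the 80% headroom rule are assumptions made for this example rather than features of any particular observability tool.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Baseline:
    """Expected behavior for one rehosted service (illustrative fields)."""
    sla_latency_ms: float   # hard SLA limit on average latency
    max_error_rate: float   # tolerated share of failed calls
    headroom: float = 0.8   # act at 80% of the limit, before a breach


def check_signal(latencies_ms, errors, heartbeat_ok, baseline):
    """Run the checks in order: heartbeat, SLA compliance, early warning.

    `errors` is a list of 0/1 flags, one per observed call.
    """
    if not heartbeat_ok:
        return "failover"
    avg_latency = mean(latencies_ms)
    error_rate = sum(errors) / len(errors)
    if avg_latency > baseline.sla_latency_ms or error_rate > baseline.max_error_rate:
        return "sla_breach"
    # Still compliant, but close to the limit: reallocate before it is breached.
    if avg_latency >= baseline.headroom * baseline.sla_latency_ms:
        return "reallocate"
    return "ok"
```

With a 200 ms SLA, an average of 175 ms returns `"reallocate"`: the service is still compliant, but the orchestrator is asked to act before the threshold is crossed.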

In finance and logistics, rehosting becomes a prerequisite for downstream AI. You align execution layers with event speed, data placement with ingestion models, and uptime with orchestration frequency. The system moves. The logic holds. And every signal arrives where AI can read it, act on it, and loop its result back into the control loop.

Here’s where things start to get more interesting.

Replatform: AI-Accelerated Lift to Modular Ground

Replatforming transitions legacy applications from fixed infrastructure to cloud-ready runtime environments. But in 2025, that shift evolves — intelligent automation begins to align execution logic with composable, observable, and latency-aligned architecture during the transition.

To begin with, each service boundary becomes machine-readable. Transformer models extract API intent, decode overloaded calls, and group side-effect functions into clean segments. Rather than lifting system code directly into containers, automation tools observe behavior under load — logging memory patterns, startup dependencies, and resilience signatures. These data points become inputs into container orchestration logic, defining how to assemble, deploy, and monitor each unit.

During schema transition, automation parses database calls, identifies anti-patterns, and rewrites joins, filters, and triggers into cloud-native equivalents. Instead of manually building middleware to bridge the new and old, orchestration engines connect services through AI-generated routing layers, which are aware of process tolerance, call frequency, and response time entropy.

Crucially, testing begins early. AI-generated test cases simulate production edge states before the platform shift completes. Each simulation scores for drift, regression, and degradation. When failures arise, they feed into reconfiguration loops — tightening performance before production rollout.

In short, replatforming in 2025 isn’t lift-and-shift. It’s runtime shaping, using inference, orchestration, and structured memory to align each function with its next execution state. AI doesn’t wrap the process. It constructs its runway: a practical definition of intelligent automation, expressed through architecture, not aspiration.

Refactor: From Business Logic to Executable Flow

Refactor imposes shape where logic sprawls. The goal isn’t surface-level syntax cleanup, but surgical reformation of execution boundaries. Telemetry reveals where latency accumulates and where logic branches proliferate without constraint anchoring. Importantly, these are not just code smells — they represent architectural distortions that reduce throughput and obscure ownership.

To address this, model-aided analyzers deconstruct monoliths into decision paths. Each branch is weighted by recurrence, exception rate, and downstream impact. Transformer-based static code models annotate function behavior, expose redundant loops, and flag polymorphic ambiguity. In this context, refactoring means isolating what moves the process, then binding it to orchestration.

Execution state becomes explicit. What once depended on context memory now writes to structure, anchoring what intelligent automation actually means in traceable system behavior. Refactored modules expose API boundaries that are scoped, observable, and retriable. Payloads carry constraint sets. Classifiers inject control logic. Errors propagate with lineage.

Refactor doesn’t pause the system. It rebases it — with live orchestration scaffolding, memory-persisted transitions, and executable fallbacks. Each component enters the runtime map with version, role, and resolution path embedded.

Ultimately, refactoring at this level creates the preconditions for Intelligent Automation: discrete actions, clean state, clear execution frames. The system stops just executing code — and starts making decisions.

That’s the tipping point.

Re-Architect: Engineering for Latency, Lineage, and Logic Flow

Re-Architect begins when process logic exceeds platform shape. At that point, legacy systems often centralize state, embed decisions in code, and treat exceptions as edge conditions. At scale, these patterns degrade throughput. Re-Architecture intervenes — not to preserve components, but to recode execution logic around signal, latency, and traceability.

To counter this, systems decompose into functionally aligned services. Each service holds domain logic, owns its data contracts, and operates under constraint-driven orchestration. Event brokers handle state propagation. Model outputs become triggers, not insights. Execution maps to flow — fast in the core, decoupled at the edges, reversible at every node.

Significantly, memory systems extend past session: vector stores link decisions to entities, embeddings to intent. Every branch holds recurrence weight, confidence band, and constraint lineage. Correction logic writes its own playbook — not via approval loops, but by inference-driven fallback paths.

At various layers, language models act upstream — parsing requests, structuring ambiguity, routing intent. Classifiers govern the midstream — mapping inputs to execution. Orchestration anchors the runtime — with retry branches, SLA timers, and rollback routes. Architecture isn’t a diagram. It’s a choreography of decision triggers and state change.

What emerges is this: Re-Architecture enables compound automation. Not by adding AI. By designing systems that execute like AI — signal-bound, latency-aware, memory-anchored, auditable.

With that frame in place, let’s now dive into the structural layers.

Structuring the Entry Point: From Entropic Inputs to Execution-Ready Payloads

Execution precision begins long before decision logic triggers. It starts where data enters, as entropy. Even in 2025, the entry point for most legacy-bound processes still looks like scanned PDFs, blurry exports from mainframes, or Excel tables disguised as systems of record. That’s why Intelligent Automation doesn’t wait for data to become clean: it builds the scaffold at ingestion.

To make this feasible, a layout-aware OCR engine reads each form not as an image, but as structure: bounding boxes, fields, alignment patterns. Still, structure alone doesn’t resolve ambiguity. Transformer models track visual zones, language entropy, and domain-specific patterns. Whether it’s a Cyrillic invoice, a freight form in Polish, or a customs stamp in Turkish, each document is passed through multilingual token streams trained for signal over syntax.

Following this, entity anchoring begins. Dates become shipment milestones. Names resolve to vendor IDs. “Net” fields differentiate payment terms from cargo weight through positional logic and probabilistic priors.
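One way to picture how a “Net” field might be disambiguated from nearby tokens and priors is a naive Bayes style update. This is a toy sketch: the priors, the per-token likelihoods, and the fallback value for unseen tokens are invented for illustration, whereas a production system would learn them from labeled documents.

```python
# Hypothetical prior belief about what a "Net" field means.
PRIOR = {"payment_terms": 0.5, "cargo_weight": 0.5}

# Hypothetical P(neighboring token | meaning).
LIKELIHOOD = {
    "payment_terms": {"days": 0.70, "kg": 0.05, "invoice": 0.25},
    "cargo_weight": {"days": 0.05, "kg": 0.80, "invoice": 0.15},
}

UNSEEN = 0.01  # small fallback likelihood for tokens not in the table


def resolve_net(neighbors):
    """Return the posterior over meanings given tokens found near the field."""
    scores = dict(PRIOR)
    for meaning in scores:
        for token in neighbors:
            scores[meaning] *= LIKELIHOOD[meaning].get(token, UNSEEN)
    total = sum(scores.values())
    return {meaning: score / total for meaning, score in scores.items()}


def best_meaning(neighbors):
    """Pick the most probable meaning."""
    posterior = resolve_net(neighbors)
    return max(posterior, key=posterior.get)
```

Under these made-up numbers, a “Net” field next to `kg` resolves to cargo weight, while one next to `days` resolves to payment terms.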

A clear example of this in action: Devox teams operationalized this pipeline for European postal flows, where scanned routing slips in nine formats fed directly into a routing engine. Entity matchers pulled from both legacy schemas and external registries — ensuring that each shipment landed on the right node, in the right jurisdiction, with SLA timers running at ingestion, not at queue formation.

Let’s be clear: this isn’t preprocessing. It’s structured inference at the threshold. The models prepare each payload for action by resolving ambiguity, anchoring context, and ensuring that orchestration reads not just content, but intent.

This is the inflection point where architecture meets strategy.

Executable Decisions: Turning Model Output into System Change

Every model call reshapes execution. These aren’t suggestions — they’re structured decisions tied to action graphs.

Let’s start midstream, where tabular classifiers operate. A transport document enters already parsed, entity-resolved, and schema-aligned. The classifier ingests key fields (destination, cargo type, HS code, carrier tag) and returns a routing tier: auto-release, customs review, or compliance hold. Risk scores bind to thresholds. Actions cascade: flag in ERP, notify export officer, log to audit mesh. Each label launches a route. Each branch holds consequence.
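The binding of risk scores to thresholds and of tiers to actions can be sketched as a lookup. The threshold values and the action names (`erp.release_shipment` and so on) are hypothetical placeholders, not real API calls.

```python
# Hypothetical action lists per routing tier.
ACTIONS = {
    "auto-release": ["erp.release_shipment", "audit.log"],
    "customs-review": ["erp.flag_shipment", "notify.export_officer", "audit.log"],
    "compliance-hold": ["erp.hold_shipment", "notify.export_officer", "audit.log"],
}


def routing_tier(risk_score: float, review_at: float = 0.3, hold_at: float = 0.7) -> str:
    """Map a classifier risk score (0..1) to a routing tier."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= hold_at:
        return "compliance-hold"
    if risk_score >= review_at:
        return "customs-review"
    return "auto-release"


def plan_actions(risk_score: float):
    """Return the tier and the ordered actions it launches."""
    tier = routing_tier(risk_score)
    return tier, ACTIONS[tier]
```

Keeping the tier-to-action map as data rather than branching code means a new tier or a changed notification rule is a configuration change that can be versioned alongside the model.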

Consider finance. Transaction classifiers parse amount, timing, device fingerprint, and behavioral sequence. High-confidence anomalies trigger hold-and-review. Mid-band scores shift to a secondary path, constrained by SLA and exposure window. Acceleration is conditional, triggered by proximity to past fraud signatures in vector space.

When ambiguity enters, LLM agents manage it. When inputs drift (an unclear customer request, a multipurpose form, inconsistent metadata), a domain-trained agent intervenes. Context memory loads prior state. Prompt heads resolve intent. Fallback routing activates only if a constraint fails. If intent is extractable and the path valid, the action proceeds: update client record, escalate SLA, reroute shipment. Memory ensures continuity. Ambiguity doesn’t stall; it resolves through sequence.

To illustrate, Devox deployed this model-agent framework at Magma Trading, where agents processed multilingual KYC packets in real time. Names aligned with internal anchors, conflicts resolved through callback logic — with every override versioned and auditable.

In logistics environments, shelf scanners classify SKU fill rate and generate real-time updates for the WMS. Underfill triggers a reorder. Overfill flags a bundling candidate. Execution routes flow automatically from model hash to system call, with no polling and no supervision.

So when we talk about “the system,” we mean more than software. In this playbook, the word “system” refers to the full automation spine that joins AI models, orchestration, and legacy cores into one continuous run.

- Inference layer ‒ OCR, LLMs, vision and tabular models translate raw inputs into structured, enriched data.

- Decision layer ‒ rules engines and classifiers attach confidence, lineage and risk weight, then wrap the output as an event payload.

- Orchestration layer ‒ an event mesh (Kafka / NATS) plus workflow runtime (Temporal / Prefect) reads that payload, launches the precise branch, tracks retries, writes audit lines.

- Legacy adapters ‒ slim API or queue workers relay each event to existing ERP, WMS, MES, or CRM modules, updating records, firing halts, issuing releases.

The path looks simple: Input → Model → Payload → Event → Business Action.

Each row in the table traces one such path: the input, the model that reads it, the decision payload it emits, the trigger fired in the target system, and the resulting business impact.

| Input | Model | Decision Payload | Trigger | Business Impact |
| --- | --- | --- | --- | --- |
| Cross-border CMR PDF (European Post) | Layout-OCR ➜ Tabular Classifier | {routeTier:"Auto-clear"} | ERP: release shipment | –80 % cycle time |
| KYC packet, multi-lingual (Magma Trading) | LLM Agent + Entity Resolver | {riskScore:0.12} | Compliance API: instant approve | –35 % operating costs |
| Heated frame on the production line | Vision Anomaly CNN | {defect:"Fill-deviation"} | MES: halt station + alert | –60 % scrap |
| Chat session (Telecom chatbot) | Intent LLM + Sentiment | {intent:"Plan-upgrade"} | CRM: auto-ticket, tier 1 | Triage five times faster |
| SQL telemetry burst (Legacy billing) | Drift Detector | {cpuSpike:"query-X", prob:0.94} | Orchestrator: scale replica | Zero outage minutes |
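To make the “Decision Payload” column more concrete, here is a minimal sketch of a versioned decision event. The `DecisionEvent` class, its field names, and the idea of deriving an idempotency key from a hash are assumptions for illustration; the article does not prescribe a specific envelope format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionEvent:
    """A typed, timestamped, entity-bound decision (illustrative schema)."""
    entity_id: str
    decision: dict
    model_version: str
    confidence: float
    schema_version: str = "1.0"
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def idempotency_key(self) -> str:
        """Same entity, decision, and model version always yield the same key."""
        basis = json.dumps(
            [self.entity_id, self.decision, self.model_version], sort_keys=True
        )
        return hashlib.sha256(basis.encode("utf-8")).hexdigest()

    def to_json(self) -> str:
        """Serialize the event, including its key, for the event mesh."""
        body = {**asdict(self), "idempotency_key": self.idempotency_key()}
        return json.dumps(body, sort_keys=True)


event = DecisionEvent(
    entity_id="shipment-1",
    decision={"routeTier": "Auto-clear"},
    model_version="classifier-v1",  # hypothetical version tag
    confidence=0.97,
)
```

Because the key ignores the timestamp, a replayed copy of the same decision produces the same key, which lets downstream consumers recognize and skip duplicates.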

Now we can see the bigger picture.

Building the Orchestration Layer for Reliable System Movement

Let’s clarify one thing upfront: inference without execution introduces latency. Automation scales only when model outputs trigger real events — inside a governed, observable orchestration layer. This backbone doesn’t replace legacy logic. It wraps it in protocols that absorb signal, map to state change, and drive continuity across hybrid systems.

Every decision enters as an event — typed, timestamped, entity-bound. A FastAPI or gRPC handler receives the payload: classifier verdict, LLM intent, vision stream ID. Orchestration engines like Temporal or Prefect bind this to execution logic: retry path, SLA guardrail, rollback route, and downstream system target.
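The “rollback route” can be illustrated with a compensation pattern: each step is paired with an undo action, and a failure unwinds completed steps in reverse order. This is a plain-Python sketch with hypothetical step names; workflow engines such as Temporal or Prefect provide their own mechanisms, which are not shown here.

```python
def run_with_rollback(steps):
    """Run (action, compensation) pairs; on failure, undo completed steps.

    Returns (succeeded, log), where log lists what ran, in order.
    """
    completed = []  # compensations for steps that finished
    log = []
    for action, compensate in steps:
        try:
            log.append(action())
            completed.append(compensate)
        except Exception as exc:
            log.append(f"failed: {exc}")
            # Unwind in reverse so later changes are reverted first.
            for undo in reversed(completed):
                log.append(undo())
            return False, log
    return True, log


def update_erp():
    return "erp updated"


def revert_erp():
    return "erp reverted"


def assign_wms_task():
    raise RuntimeError("WMS unavailable")  # simulated downstream failure


def cancel_wms_task():
    return "wms task cancelled"


ok, trail = run_with_rollback(
    [(update_erp, revert_erp), (assign_wms_task, cancel_wms_task)]
)
```

In this run the ERP update succeeds, the WMS step fails, and the ERP change is reverted, so `trail` records all three events in order.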

What’s important here is continuity. Legacy systems stay online. Intelligent automation technology wraps them in state-change calls driven by model outputs: ERP record updates, WMS task assignment, CRM workflow initiation. Each action flows through the event mesh (Kafka, NATS, or a platform-native bus), tagged by version, constrained by idempotency, and logged with full trace.

When something fails, the system reacts instantly. If the WMS fails to consume a dispatch trigger, the orchestrator escalates: error queue, retry timer, operator alert. The incident logs the payload, source model version, and failure context. Decision state persists — recoverable, replayable, immutable.
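A minimal sketch of that escalation path, assuming a generic `send` callable in place of a real WMS client: the function retries with exponential backoff and, once attempts are exhausted, returns an incident record suitable for an error queue. The field names and default values are illustrative.

```python
import time


def dispatch_with_retry(send, event, max_attempts=3, base_delay_s=0.5,
                        sleep=time.sleep):
    """Deliver an event; after repeated failure, return an incident record."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            send(event)
            return {"status": "delivered", "attempts": attempt}
        except Exception as exc:
            last_error = repr(exc)
            if attempt < max_attempts:
                # Exponential backoff: 0.5 s, 1 s, 2 s, ...
                sleep(base_delay_s * 2 ** (attempt - 1))
    # Retries exhausted: keep the payload and context for replay and alerting.
    return {
        "status": "dead_lettered",
        "attempts": max_attempts,
        "payload": event,
        "error": last_error,
        "alert": "operator",
    }
```

The `sleep` parameter is injectable so the backoff can be skipped in tests. The returned incident keeps the original payload, which is what makes a later replay possible.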

In essence, orchestration here is infrastructure. It governs execution velocity, ensures real-time propagation, and protects against drift. So when AI decides, orchestration ensures those decisions translate into action — with built-in memory, policy, and accountability.

Ensuring Stability: Memory Systems and Model Drift Governance

In Intelligent Automation, continuity doesn’t come from model output alone — it emerges from how systems remember. Each execution writes a trace. Each trace feeds a session graph. And over time, these graphs anchor how decisions evolve.

What makes this powerful is that session memory modules structure more than state — they embed intent, constraints, and correction lineage into each flow. So when exceptions resurface, the system doesn’t restart; it recalls. Entities are rehydrated, unresolved branches resume, and prompt augmentation adapts based on vector-similar sequences. These aren’t logs. They’re decision scaffolds.

At the core of this memory system are vector stores, used not just for retrieval but for inference augmentation, which makes them core enablers of intelligent automation technologies. Historical resolutions, flagged anomalies, and SLA deviations are all indexed, weighted, and fed back into model input through relevance-ranked payloads. Classification becomes context-aware. Routing becomes history-bound. Even when an event looks new, chances are it has echoes in the graph.
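Relevance ranking over stored resolutions can be sketched with cosine similarity. The in-memory list below stands in for a real vector store, and the two-dimensional embeddings are toy values picked for readability, not the output of any embedding model.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query, store, k=2):
    """Return the k stored cases most similar to the query embedding."""
    ranked = sorted(
        store, key=lambda item: cosine(query, item["embedding"]), reverse=True
    )
    return ranked[:k]


# Hypothetical past resolutions with toy embeddings.
store = [
    {"id": "late-customs-form", "embedding": [1.0, 0.0]},
    {"id": "wrong-vendor-id", "embedding": [0.0, 1.0]},
    {"id": "missing-customs-stamp", "embedding": [0.9, 0.1]},
]
matches = top_k([1.0, 0.0], store, k=2)
```

The two customs-related cases rank highest for this query. Their stored resolutions are what would be added to the model input as context.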

Stability arrives through drift governance. Each model sits under monitoring. Feature distributions, outcome variances, confidence entropy — all plotted over time. When patterns deviate, alerts fire. When thresholds break, retraining pipelines trigger. Weekly model review councils align Product, Ops, and Engineering around resolution, not blame.
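One common way to quantify a shift in a feature distribution is the Population Stability Index (PSI), which sums `(actual - expected) * ln(actual / expected)` over bins. The article does not name a specific metric, so PSI is used here as an illustrative choice; the bin edges and the 0.2 alert threshold are assumptions, although values in that range are often quoted as rules of thumb.

```python
import math
from bisect import bisect_right


def proportions(values, edges, eps=1e-6):
    """Share of values per bin; eps avoids log(0) for empty bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index between a baseline and a recent sample."""
    e = proportions(expected, edges)
    a = proportions(actual, edges)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(psi_value, threshold=0.2):
    """Flag a model for the retraining pipeline (threshold is an assumption)."""
    return psi_value > threshold


edges = [0.25, 0.5, 0.75]
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recent = [0.8, 0.9, 0.85, 0.95, 0.9, 0.8, 0.7, 0.99]
drift_score = psi(baseline, recent, edges)
```

In this toy data the recent scores have migrated into the top bin, so `needs_retraining(drift_score)` returns `True`, while comparing the baseline with itself yields a PSI of zero.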

What’s more, feedback enters as structure: misroutes log with payloads, overrides flag by source, exceptions queue by class. Each retrain loop is scoped — only the affected segment adjusts. And because orchestration versions every model call, no lineage is lost — even as behavior evolves.

This layer — memory-bound, drift-aware, retrain-ready — doesn’t freeze models in time. It engineers their evolution. And that’s how execution scales: through decisions that don’t forget, and systems that adapt by design.

Frequently Asked Questions

- How does orchestration ensure AI-driven decisions actually execute?

AI does not deliver value through inference alone. Its value lies in execution.

Orchestration is the contract between what the model sees and what the system does. Every decision — whether a routing code from a classifier, an extracted entity from a layout transformer, or a flag from a fraud model — enters the orchestration layer not as insight, but as instruction.

FastAPI endpoints receive structured payloads: entity, vector, decision path. From there, the orchestrator — Temporal, Prefect, or event-mesh-backed — routes each decision to its system target: ERP, CRM, MES, or logistics engine. Execution isn’t passive; it’s logged, versioned, retried, and audit-wired.

Each inference triggers a cascade: the action handler runs with constraint logic, exception branches activate on failure, and idempotent handlers keep retries from applying the same action twice. Audit logs capture model version, latency, decision cause, and rollback route, enabling compliance, debugging, and drift mitigation.

You are not waiting for alerts. You are advancing the process state. Model decisions become system transactions — visible in dashboards, traceable in logs, recoverable in real time.

In this architecture, intelligence isn’t something you review. It’s something that moves the system forward.

- How do we manage exceptions when models yield uncertain outcomes?

Exceptions define the boundary of system intelligence. Each ambiguous output — whether from a classifier evaluating borderline cases or a vision model parsing partial alignment — enters as a structured event. Confidence intervals, source context, token lineage, and anomaly scores accompany the payload.

The orchestration layer routes these with precision. Decision branches predefine escalation logic: low-certainty classifications enter a human-in-the-loop queue with SLA tags, embedded diagnostics, and context-retrieval hooks. Exception handlers initiate side flows: resolution tracking, partial rollbacks, adaptive augmentation prompts.
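A human-in-the-loop queue with SLA tags might look like the sketch below, where uncertain cases are ordered by deadline so the most urgent review surfaces first. The confidence threshold, the SLA windows, and the class and field names are all hypothetical.

```python
import heapq
from datetime import datetime, timedelta


class ReviewQueue:
    """Human-in-the-loop queue ordered by SLA deadline (earliest first)."""

    def __init__(self):
        self._heap = []
        self._seq = 0  # tie-breaker so equal deadlines never compare dicts

    def submit(self, case: dict, sla_minutes: int, now: datetime) -> None:
        deadline = now + timedelta(minutes=sla_minutes)
        heapq.heappush(self._heap, (deadline, self._seq, case))
        self._seq += 1

    def next_case(self):
        """Pop the case whose SLA deadline is closest."""
        deadline, _, case = heapq.heappop(self._heap)
        return case, deadline

    def __len__(self):
        return len(self._heap)


def route(case: dict, queue: ReviewQueue, now: datetime,
          threshold: float = 0.8) -> str:
    """Auto-execute confident decisions; queue uncertain ones for review."""
    if case["confidence"] >= threshold:
        return "auto"
    # Tighter SLA for the least certain cases (illustrative rule).
    sla_minutes = 30 if case["confidence"] < 0.5 else 120
    queue.submit(case, sla_minutes, now)
    return "human_review"
```

Passing `now` explicitly keeps the routing deterministic and easy to test, and the heap ensures a reviewer always receives the case closest to breaching its SLA.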

Session memory tracks resolution lineage. Each override, fallback, or adjustment enters the retraining graph. Exception heatmaps surface recurring edges, feeding into weekly drift analysis and prompt-tuning cycles.

Exceptions serve as instrumentation. They expose signal thresholds, model fatigue, and architecture inflection points. Instead of halting flow, they guide refinement. Execution continues — measured, auditable, and designed to automate intelligent adaptations at the operational edge.

- How does Intelligent Automation evolve after launch, and what governs change and stability?

Execution stability begins with memory. Every model action, system decision, and exception override is written to a lineage graph. This graph isn’t archival; it’s operational, and it shows what intelligent process automation truly means: decisions remembered, adapted, and reused.

Change enters through a signal. Vector stores register shifts in embeddings: entities appear with altered attributes, intents diversify, and phrasing shifts. These aren’t alerts — they’re indicators of structural movement inside the data stream.

Weekly drift review sessions analyze these changes. Stakeholders review statistical divergence, feature entropy, and anomaly clusters. Adjustments follow architecture: model retraining, prompt adaptation, constraint extension. Each change is versioned, and each impact is tested against baseline flow metrics.

Governance aligns clarity with evolution. RACI charts define ownership by model and by payload. Audit logs display “inference-to-action” traceability, including model ID, confidence, override route, and resolution path. No update is deployed without boundary testing across business-critical workflows.

Stability is maintained through motion: systems adapt, but under visible structure. What moves, learns. What learns, sharpens execution.

- What does success look like within the first 90 days after launch?

Structured input lands cleanly at the first checkpoint. OCR adapts to real-world variance. Entity extractors are anchored in the schema. Document types resolve without operator tagging. Input is stabilized upfront: no cascading exceptions, no transmission latency.

By day 45, the execution branches behave like infrastructure. Classifiers issue staged decisions with observable backtraces. Exceptions arrive in queues with cause vectors and a processing index. SLA paths measure their own time. Each decision logs its weight, not just its result, helping organizations automate intelligent response paths.

By day 90, the orchestration absorbs the variance. Decision nodes trigger across systems (CRM updates, ERP movements, WMS triggers) without external processing. Memory binds inputs to results. Repetitions are eliminated. Logs feed drift indices, retrain signals, and override lineage.

Success feels like pressure coming off. No tickets pile up. No calls repeat. Execution sequences match the system design: well thought out, fast, traceable. That’s when the real synergy begins.

- How do legacy and modern systems talk without disruption?

They coordinate through events, not interfaces.

Each decision — whether surfaced by a classifier, vision stream, or LLM — enters as a structured payload: entity, vector, routing path, timestamp. Orchestration engines ingest this data and trigger downstream updates through predefined connectors. These connectors map to stable functions inside legacy modules — mainframe extract routines, certificate generators, reconciliation tasks — exposed as callable tasks via message queues or synchronous APIs.

The orchestration layer governs retries, parallelism, and fallback routing. Each event carries observability: model version, input source, expected outcome. Failures trigger replay through rollback paths. Success updates system state with precision.

Legacy systems retain control over state changes. Modern layers govern flow logic. The two remain decoupled yet synchronized — through timestamped events, idempotent endpoints, and version-aware contract schemas. There’s no interface duplication. Just function binding through event brokers with traceable lineage.
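The combination of idempotent endpoints and version-aware contracts can be sketched as a thin adapter in front of a legacy function. The event shape (`event_id`, `schema_version`, `payload`), the supported versions, and the in-memory duplicate set are assumptions for illustration; a real adapter would persist the set of processed IDs.

```python
SUPPORTED_SCHEMAS = {"1.0", "1.1"}  # hypothetical contract versions


class LegacyAdapter:
    """Relays events to a legacy function at most once per event ID."""

    def __init__(self, apply_change):
        self._apply = apply_change  # callable wrapping the legacy routine
        self._seen = set()          # processed event IDs (in memory here)

    def handle(self, event: dict) -> str:
        if event["schema_version"] not in SUPPORTED_SCHEMAS:
            return "rejected"       # unknown contract: do not guess
        if event["event_id"] in self._seen:
            return "duplicate"      # replayed event: state already applied
        self._apply(event["payload"])
        # Mark as processed only after the legacy call succeeds.
        self._seen.add(event["event_id"])
        return "applied"


ledger = []  # stands in for legacy system state
adapter = LegacyAdapter(ledger.append)
first = adapter.handle(
    {"event_id": "e-1", "schema_version": "1.0", "payload": {"release": "S-42"}}
)
replay = adapter.handle(
    {"event_id": "e-1", "schema_version": "1.0", "payload": {"release": "S-42"}}
)
```

The replayed event returns `"duplicate"` and leaves the ledger untouched, which is what allows the orchestrator to retry freely without risking a double release.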

System stability emerges through coordination, not conversion. Each module performs in its domain. Cohesion is enforced not by code parity but by execution mesh. AI informs the flow. Orchestration enforces state.

- What typical ROI can mid-size enterprises expect?

Execution accelerates. Coordination cost collapses. This is how an intelligent automation strategy compounds value from process, not speculation.

In logistics, AI-driven document parsing and real-time routing compress cycle time across cross-border shipments. One client automated certificate preparation for 34 jurisdictions, reducing document handling time by over 80% and eliminating nearly all regulatory rework.

In finance, memory-enabled fraud detection and KYC classification clear inbound cases in milliseconds. Clients report 21–35% operational cost reduction per transaction, driven by the removal of handoffs, not headcount.

In manufacturing, predictive maintenance reshapes the economics of downtime. Line interruptions drop by over 60% where telemetry-based models preempt failure. Visual QA loops shrink rework rates below 1% with every correction feeding upstream learning.

Mid-size firms implementing AI-first orchestration typically see ROI within 11 months. Best-performing cases reach payback in 6–7 months, as initial integrations scale into compounding throughput. This isn’t an edge-case benefit — it’s load-bearing value: measured in shipped goods, cleared claims, and cycles saved.